# KNIME服务器管理指南

# 介绍

本指南详细介绍了KNIME Server的配置选项。

如果要安装KNIME Server,则应首先查阅《 KNIME Server安装指南》 (opens new window)。

有关配置KNIME WebPortal的管理选项,请参阅《 KNIME WebPortal管理指南》 (opens new window)。

有关从KNIME Analytics Platform连接到KNIME Server或使用KNIME WebPortal的指南,请参考以下指南:

其他资源也是《 KNIME服务器高级设置指南》 (opens new window)。

# 发行说明

KNIME Server 4.11是4.x发行版的功能发行版。使用KNIME Server 4.10的所有客户端将继续不受限制地使用KNIME Server 4.11。

| 要了解当前正在运行哪个版本的KNIME Server,可以 在WebPortal上查看“ 管理”页面 (opens new window)。 | |

|---|---|

# 新的功能

有关包含新的Analytics Platform 4.2功能的列表,请参见此处 (opens new window)。

突出的新功能是:

- 改进的OAuth身份验证(设置指南) (opens new window)

- 执行器组(新功能) (opens new window)

- 执行器预订(新功能) (opens new window)

- 在AWS上自动缩放(AWS指南) (opens new window)

- 切换到Apache Tomcat(通过TomEE)(发行说明) (opens new window)

还提供了KNIME Server 4.11的 (opens new window)详细变更 (opens new window)日志。

# 切换到Apache Tomcat

在以前的版本中,KNIME Server的应用程序服务器组件基于Apache TomEE(Apache Tomcat的Java Enterprise Edition)。现在,在新的KNIME Server 4.11中,将TomEE替换为标准的Apache Tomcat。

与切换到标准Apache Tomcat一致,现在不鼓励使用旧的EJB挂载点连接到KNIME Server,而支持较新的REST实现。迁移到REST挂载点非常简单。用户使用现有的EJB挂载点登录后,将立即提示您单击即可切换到REST。与EJB相比,REST提供了许多好处-特别是在性能和稳定性方面。

使用的Tomcat版本是9.0.36。请注意,对于不愿切换到Tomcat的现有客户,我们仍然提供基于Apache TomEE的KNIME Server安装程序。它已更新到最新版本,TomEE 8.0.3。

# 通过Qpid执行

早期版本的KNIME Server使用RMI在应用程序服务器和KNIME Executor之间建立连接。RMI现在已由基于Apache Qpid的嵌入式消息队列代替。诸如消息请求作业执行之类的事件不是通过应用程序服务器与执行器之间直接通信,而是通过消息队列传递。

Qpid技术与KNIME Server安装程序捆绑在一起,因此不需要其他设置。所有新安装的KNIME Server安装都配置为默认使用Qpid。另外,KNIME Server安装程序对执行器的knime.ini文件进行了各种调整,以简化与Qpid的连接。

请注意,Qpid仅支持与应用程序服务器在同一主机上运行的单个KNIME Executor。如果您要运行多个分布式KNIME执行器,仍然需要设置RabbitMQ。与以前的版本不同,Executor不会与服务器一起自动启动,而是必须单独启动。《KNIME服务器安装指南》 (opens new window)中介绍了必要的步骤

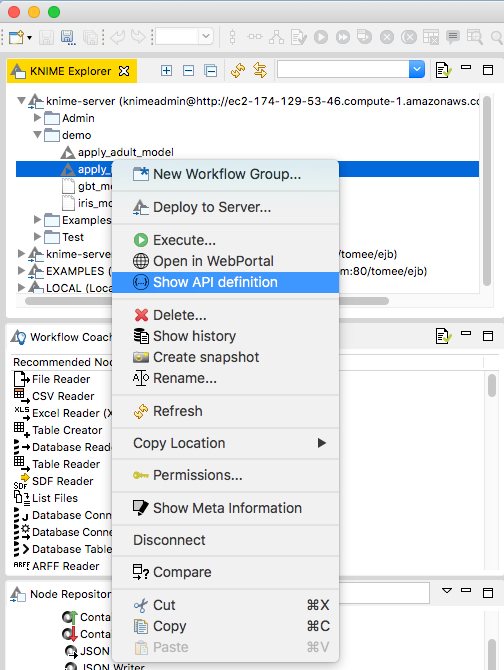

# 切换到REST挂载点

从KNIME Server 4.11发行版开始,现在不鼓励使用旧的EJB挂载点连接到KNIME Server,而支持较新的REST实现。如果是基于Tomcat的KNIME服务器,这将是唯一有效的连接方法。即,在Apache Tomcat上运行的KNIME服务器不再支持EJB。

我们已经非常容易地迁移到REST挂载点。只需使用现有的EJB挂载点登录,就会出现提示,您可以单击一下即可从其切换到REST。与EJB相比,REST提供了许多好处-特别是在性能和稳定性方面。

切换到REST之后,您会注意到行为上有些细微的差别。最值得注意的是,当您启动使用Workflow Variables或的工作流程时,我们不再显示弹出对话框Workflow Credentials。现在,可以直接从工作流执行对话框访问这些选项。要到达那里,请右键单击服务器存储库中的工作流程→执行。然后打开Configuration options选项卡。这使您可以输入工作流变量和工作流凭证。

此外,该Configuration options选项卡还允许您为特定工作流程中位于工作流程顶层(即不在组件或元节点内部)的所有Configuration节点设置值。此功能适用于KNIME Analytics Platform节点存储库的“工作流抽象”→“配置”类别中不包含传入连接(包括流变量)的所有节点。

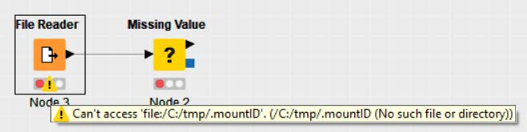

# 通过KNIME工作流程访问本地文件系统

越来越多的KNIME节点(见下文)正在修订中,以使用新的共享框架进行文件访问。在接下来的发行版中将会有更多的节点,因此最终所有KNIME节点的文件访问都将使用共享框架。

在KNIME Server上执行时,首选项控制这些节点是否可以访问KNIME Server Executor的本地文件系统。在此版本中,默认情况下不允许本地文件系统访问(以前是允许的)。

要允许本地文件系统访问(不建议),可以将以下行添加到KNIME Server执行程序使用的自定义配置文件中:

/instance/org.knime.filehandling.core/allow_local_fs_access_on_server=true

此首选项当前会影响以下KNIME节点:Excel Reader(XLS),Excel Writer(XLS),Excel Sheet Appender(XLS),Line Reader。此外,这会影响新文件处理(实验室)类别中的所有节点。其他任何KNIME节点均不受此设置的影响。

# 服务器架构

KNIME Server是一个Java Enterprise Application,KNIME WebPortal是一个标准Java Web Application,都安装在Tomcat应用程序服务器上,蓝色图标位于下图的中间。用户可以登录到服务器,服务器将根据Tomcat提供的任何身份验证源进行身份验证。

KNIME Server的主要任务之一是管理和控制服务器的存储库。上载到服务器的工作流通过服务器应用程序并存储在存储库中,该存储库只是服务器文件系统上的一个文件夹(图中右侧的蓝色圆柱体)。一旦安装了客户端服务器扩展,就可以在KNIME Server中控制对存储的工作流的访问,并且可以从KNIME Explorer中操纵工作流的访问权限。

服务器上的工作流程执行是由KNIME执行器执行的。KNIME Executor是普通KNIME Analytics Platform应用程序的持久无头实例(上图中最左侧的元素)。

重要的是要注意,如果执行程序安装了必需的功能,并且与用于创建工作流程的KNIME Analytics Platform版本相同(或更新),则工作流程只能在服务器上成功加载和执行。

# 服务器配置文件和选项

# KNIME服务器配置文件

KNIME服务器由名为的特定于knime的配置文件配置 knime-server.config。该文件可以在中找到 /config/knime-server.config。可以在运行时更改此文件中定义的大多数参数,这些参数将尽快生效。默认值将用于空白或缺少的配置选项。

本节 KNIME服务器配置文件选项 (opens new window) 中包含的所有配置选项和解释的完整列表。有关适用于KNIME WebPortal的所有配置选项和说明的列表,请参阅 《 KNIME WebPortal管理指南》的 配置文件选项部分 (opens new window)。

# KNIME服务器配置文件选项

在下面,您将找到一个表格,其中包含所有受支持的配置选项(按字母顺序)。其中一些将在后面的部分中更详细地描述。可以在文件中设置选项 /config/knime-server.config。

对于Windows用户:对于服务器配置文件中的路径,请使用正斜杠(“ /”)或双反斜杠(“ \”)。单个反斜杠用于转义字符。

该表的以下批注提供了一些其他信息,这些信息涉及受影响的Executor类型以及更改是在运行时生效还是需要重新启动服务器。

| [ST] | 更改在重启KNIME Server后生效 |

|---|---|

| [RT] | 更改可以在运行时生效 |

| [回覆] | 更改仅影响RMI执行者 |

| [DE] | 更改仅影响KNIME执行器(请参阅此处 (opens new window)) |

Some options can be set as property in the knime-server.config file as well as by defining an environment variable (Env). The environment variable changes will only take effect after a restart of KNIME Server. If the environment variable for an option is set, the property in the configuration file will be ignored.

com.knime.server.admin_email=,,… [RT]A comma separated list of email addresses that will get notified when there is a problem with the server, e.g. the license is about to expire or the maximum number of users has been reached. |

|---|

com.knime.server.canonical-address= [RT]The communication between Executor and server is performed through the server’s REST interface. In case auto-detection of the server’s address doesn’t work correctly, you have to specify the canonical address here, e.g. http://knime-server:8080/. This option is not required if server and Executor are running on the same computer. See also section enabling workflow execution (opens new window) below for more details. Env: KNIME_SERVER_CANONICAL_ADDRESS= |

com.knime.server.config.watch= [ST]If set to true changes to the configuration file are applied immediately without a server restart. Default is false, i.e. all changes will require a server restart. |

com.knime.server.csp-report-only= [RT]Tells the browser to still serve content that violates the Content-Security-Policy and instead display a warning, by setting the Content-Security-Policy-Report-Only header rather than the Content-Security-Policy header (defaults to false). For more information about Content-Security-Policy-Report-Only, please refer to this resource (opens new window). |

com.knime.server.default_mount_id= [RT]Specifies the name of the default mount ID. This is fetched, when clients set up their mount point to the server. Defaults to the server’s hostname. Env: KNIME_SERVER_DEFAULT_MOUNT_ID= |

com.knime.enterprise.executor.embedded-broker= [ST]Enables the use of the embedded message queue (Apache Qpid) instead of a separate RabbitMQ installation. This allows you to run distributed KNIME Executors on the same system as the KNIME Server. By default this is enabled. |

com.knime.enterprise.executor.embedded-broker.port= [ST]Allows to configure the port for the embedded message queue (see option above). The default is 5672 and you should only change it if the port is already in use by another service. You also need to adjust the message broker address in the Executor’s knime.ini in this case. |

com.knime.enterprise.executor.msgq=amqp://:@/ [DE][ST]URL to the RabbitMQ virtual host. In case RabbitMQ High Available Queues (opens new window) are used, simply add additional : separated by commas to the initial amqp address: com.knime.enterprise.executor.msgq=amqp://:@rabbitmq-host/knime-server,amqp://:,amqp://: Note, this is supported with KNIME Server 4.11.3 onward. Env: KNIME_EXECUTOR_MSGQ=amqp://:@/ |

com.knime.enterprise.executor.msgq.connection_retries= [DE][ST]Defines the maximum number of connection retries for the message queue, that should be performed during server startup. The delay between retries is 10 seconds. The default is 9, `` has to be an integer value greater or equal to 1. For value less than 0 the number of retries is infinite. Env: KNIME_MSGQ_CONNECTION_RETRIES= |

com.knime.enterprise.executor.msgq.names=,,… [DE][ST]Defines the names of Executor Groups. The number of names must match the number of rules defined with com.knime.enterprise.executor.msgq.rules. See executor groups (opens new window) for more information. |

com.knime.enterprise.executor.msgq.rules=,,… [DE][ST]Defines the exclusivity rules of the Executor Groups. The number of rules must match the number of rules defined with com.knime.enterprise.executor.msgq.names. See executor groups (opens new window) for more information. |

com.knime.server.executor.blacklisted_nodes=,,… [RT]Specifies nodes that are blacklisted by the server, i.e. which aren’t allowed to be executed. For blacklisting a node you have to provide its factory name. Wildcards (*) are supported. For more information see here (opens new window). |

com.knime.server.executor.knime_exe= [RE][RT]Specifies the KNIME executable that is used to execute flows on the server. Default is none (no execution available on the server). This option is not used when using the default queue-based execution mode. |

com.knime.server.executor.prestart= [RE][ST]Specifies whether an Executor should be started during server startup or if it should be started on-demand when the first workflow is being executed. Default is to prestart the Executor. |

com.knime.server.executor.reject_future_workflows= [RT]Specifies whether the Executor should reject loading workflows that have been create with future versions. For new installations the value is set to true. If no value is specified the Executor will always try to load and execute any workflow by default. |

com.knime.server.executor.start_port= [RE][ST]Specifies the start port that the server uses to communicate with the KNIME Executor. Default is 60100. With multiple Executors and/or automatic Executor renewal multiple consecutive ports are used. |

com.knime.server.executor.update_metanodelinks_on_load= [RT]Specifies whether component links in workflows should be updated right after the workflow has been loaded in the KNIME Executor. Default is not to update component links. |

com.knime.server.job.async_load_reconnect_timeout= [DE][RT]Specifies the default connection timeout of asynchronously loaded jobs in case of a server restart. If a server restart occurs the server tries to reconnect to jobs that have been loaded asynchronously, as they might be still in the message queue or discarded due to an error. For this the maximum of the remaining load timeout or the async_load_reconnect_timeout is used to wait for status updates. If the time elapses without a status update loading will be canceled and the job state will be set to LOAD_ERROR. |

com.knime.server.job.default_cpu_requirement= [RT]Specifies the default CPU requirement in number of cores of jobs without a specific requirement set. See CPU and RAM requirements (opens new window) for more information. The default is 0. |

com.knime.server.job.default_load_timeout= [RT]Specifies how long to wait for a job to get loaded by an Executor. If the job does not get loaded within the timeout, the operation is canceled. The default is 1m. This timeout is only applied if no explicit timeout has been passed with the call. |

com.knime.server.job.default_ram_requirement= [RT]Specifies the default RAM requirement of jobs without a specific requirement set. See CPU and RAM requirements (opens new window) for more information. In case no unit is provided it is automatically assumed to be provided in megabytes. The default is 0MB. |

com.knime.server.job.default_report_timeout= [RT]Specifies how long to wait for a report to be created by an Executor. If the report is not created within the timeout, the operation is canceled. The default is 1m. This timeout is only applied if no explicit timeout has been passed with the call. |

com.knime.server.job.default_swap_timeout= [RT]Specifies how long to wait for a job to be swapped to disk. If the job is not swapped within the timeout, the operation is canceled. The default is 1m. This timeout is only applied if no explicit timeout has been passed with the call (e.g. during server shutdown). |

com.knime.server.job.discard_after_timeout= [RT]Specifies whether jobs that exceeded the maximum execution time should be canceled and discarded (true) or only canceled (false). May be used in conjunction with com.knime.server.job.max_execution_time option. The default (true) is to discard those jobs. |

com.knime.server.job.exclude_data_on_save= [DE][RT]Specifies whether node outputs of jobs that are saved as workflows shall be excluded. If this is set to true the resulting workflows will be reset, i.e. no output data are available at the nodes. The default value is false. |

com.knime.server.job.max_execution_time= [RT]Allows to set a maximum execution time for jobs. If a job is executing longer than this value it will be canceled and eventually discarded (see com.knime.server.job.discard_after_timeout option). The default is unlimited job execution time. Note that for this setting to work, com.knime.server.job.swap_check_interval needs to be set a value lower than com.knime.server.job.max_execution_time. |

com.knime.server.job.max_lifetime= [RT]Specifies the time of inactivity, before a job gets discarded (defaults to 7d), negative numbers disable forced auto-discard. |

com.knime.server.job.max_time_in_memory= [RT]Specifies the time of inactivity before a job gets swapped out from the Executor (defaults to 60m), negative numbers disable swapping. |

com.knime.server.job.status_update_interval= [RE][RT]Specifies the interval at which the running Executor instances are checked for unnoticed status changes and if they are still alive. Default is every 60s. |

com.knime.server.job.swap_check_interval= [RT]Specifies the interval at which the server will check for inactive jobs that can be swapped to disk. Default is every 1m. |

com.knime.server.login.allowed_groups =,,… [RT]Defines the groups that are allowed to log in to the server. Default value allows users from all groups. Env: KNIME_LOGIN_ALLOWED_GROUPS=,,… |

com.knime.server.login.consumer.allowed_accounts =,,… [RT]Defines account names that are allowed to log in to the server as consumer. Default value allows login as consumer for all users. Env: KNIME_CONSUMER_ALLOWED_ACCOUNTS=,,… |

com.knime.server.login.consumer.allowed_groups =,,… [RT]Defines the groups that are allowed to log in to the server as consumer. Default value allows login as consumer from all groups. Env: KNIME_CONSUMER_ALLOWED_GROUPS=,,… |

com.knime.server.login.jwt-lifetime= [RT]Defines the maximum lifetime of JSON Web Tokens issued by the server. The default value is 30d. A negative value allows unrestricted tokens (use this value with care because there is no way to revoke issued tokens). |

com.knime.server.login.user.allowed_accounts =,,… [RT]Defines account names that are allowed to log in to the server as user. Default value allows login as user for all users. |

com.knime.server.login.user.allowed_groups =,,… [RT]Defines the groups that are allowed to log in to the server as a user. Default value allows login as user from all groups. |

com.knime.server.report_formats= [RT]Defines the different formats available for report generation as a comma separated list of values. Possible values are html, pdf, doc, docx, xls, xlsx, ppt, pptx, ps, odp, odt and ods. If this value is empty or not set the default list of formats is html, pdf, docx, xlsx and pptx. |

com.knime.server.repository.update_recommendations_at= [RT]Defines a time during the day (in ISO format, i.e. 24h notation, e.g. 21:15) at which the node recommendations for the workflow coach are updated based on the current workflow repository contents. Default is undefined which means that no node recommendations will be computed and provided by the server. |

com.knime.server.server_admin_groups=,,… [RT]Specifies the admin group(s). Users belonging to at least one of these groups are considered KNIME Server admins (not Tomcat server admins). Default is no admin groups. Env: KNIME_SERVER_ADMIN_GROUPS=,,… |

com.knime.server.server_admin_users=,,… [RT]Specifies the user(s) that are KNIME Server admins (not Tomcat admins). Default is no users. |

com.knime.server.user_directories.directory_location= [ST]Specifies the base directory in which user directories shall be created on first login. All non existing directories of `` will be created and their owner set to the defined owner (com.knime.server.user_directories.parent_directory_owner). The permissions of the created directories are: owner: rwx, world: r--. If left empty no user directories will be created and all com.knime.server.user_directories options will be ignored. Note that only logins via the Analytics Platform will cause a user directory to be created. |

com.knime.server.user_directories.parent_directory_owner= [ST]Specifies the owner of the created directories of com.knime.server.user_directories.directory_location. If left empty the default value knimeadmin will be used. |

com.knime.server.user_directories.owner_permissions= [ST]Specifies the permissions of the owners (users themselves) for their created user directories. The defined permissions have to be in a block of 3 characters (r,w,x,-), e.g. rwx or r-x. If left empty the default value rwx is used. |

com.knime.server.user_directories.inherit_permissions= [ST]Specifies if the permissions of the created user directories shall be inherited from their parent directory. If left empty the default value false is used. |

com.knime.server.user_directories.groups=:,:,… [ST]Specifies the permissions of groups for the created user directories. The defined permissions have to be in a block of 3 characters (r,w,x,-), e.g. rwx or r-x. If left empty no group permissions are set. |

com.knime.server.user_directories.users=:,:,… [ST]Specifies the permissions of users for the created user directories. The defined permissions have to be in a block of 3 characters (r,w,x,-), e.g. rwx or r-x. If left empty no user permissions are set. |

com.knime.server.user_directories.world_permissions= [ST]Specifies the permissions of others for the created user directories. The defined permissions have to be in a block of 3 characters (r,w,x,-), e.g. rwx or r-x. If left empty the default value r-- is used. |

com.knime.server.action.upload.force_reset= [RT]Specfifies if all workflows shall be reset before being uploaded. This only works for workflows that are uploaded in the KNIME Analytics Platform 4.2 or higher. If left empty the default value false is used. The user can only change the reset behavior manually if /instance/org.knime.workbench.explorer.view/action.upload.enable_reset_checkbox is set to true, otherwise the behavior cannot be changed by the user. |

com.knime.server.action.upload.enable_reset_checkbox= [RT]If set to true together with com.knime.server.action.upload.force_reset the user has the option to change the reset behavior in the Deploy to Server dialog. This only works for workflows that are uploaded in the KNIME Analytics Platform 4.2 or higher. If left empty the default value false is used. |

com.knime.server.action.snapshot.force_creation= [RT]Specifies if a snapshot shall always be created when overwriting a workflow or file. This only works when overwriting workflows or files in the KNIME Analytics Platform 4.2 or higher. If left empty the default value false is used. |

In KNIME Analytics Platform, these options are supported by KNIME Server: add them to the knime.ini file, after the -vmargs line, each in a separate line.

-Dcom.knime.server.server_address=Sets the `` as the default Workflow Server in the client view.

# Default mount ID

KNIME supports mountpoint relative URLs using the knime protocol (see the KNIME Explorer User Guide (opens new window) for more details). Using this feature with KNIME Server requires both the workflow author and their collaborator to use the shared Mount IDs. With this in mind, you can now set a common name (Mount ID) for the server to all users.

The default name for your server can be specified in the configuration file:

com.knime.server.default_mount_id=<server name>

Please note that a valid Mount ID contains only characters a-Z, A-Z, '.' or '-'. It must start with a character and not end with a dot nor hyphen. Additionally, Mount IDs starting with knime. are reserved for internal use. | |

|---|---|

# Blacklisting nodes

You might want to prevent the usage of certain nodes on the Executor of KNIME Server. While you can decide, which extensions you install for the Executor there might be nodes in the basic installation of KNIME Analytics Platform or in a required extension that shouldn’t be used.

The configuration option

com.knime.server.executor.blacklisted_nodes=<node>,<node>,...

allows you to define a list of nodes that should be blocked by the Executor. This list also supports wildcards (*). If a workflow contains a blacklisted node the Executor will throw an error and abort loading the workflow.

To blacklist a node you have to provide the full name of the node factory. The easiest way to determine the factory names of the nodes you want to block is to create a workflow with all nodes that should be blacklisted. After saving the workflow you are able to access the settings.xml of each node under ///settings.xml. The factory name can be found in the entry with key "factory".

The following shows an example on how to block the Java Snippet nodes. The factory information for the Java Snippet node is

<entry key="factory" type="xstring" value="org.knime.base.node.jsnippet.JavaSnippetNodeFactory"/>

To block the Java Snippet node we simply provide the value (without the quotes)

com.knime.server.executor.blacklisted_nodes=org.knime.base.node.jsnippet.JavaSnippetNodeFactory

The factory names for Java Snippet (simple), Java Snippet Row Splitter, and Java Snippet Row Filter are

org.knime.ext.sun.nodes.script.JavaScriptingNodeFactory

org.knime.ext.sun.nodes.script.node.rowsplitter.JavaRowSplitterNodeFactory

org.knime.ext.sun.nodes.script.node.rowfilter.JavaRowFilterNodeFactory

Since they all share the same prefix, we append n factory name making use of wildcards:

com.knime.server.executor.blacklisted_nodes=org.knime.base.node.jsnippet.JavaSnippetNodeFactory,org.knime.ext.sun.nodes.script.*Java*

While users are still able to upload workflows containing these nodes, the Executor won’t load a workflow containing any of them.

# KNIME executor job handling

# Job swapping

Jobs that are inactive for a period of time may be swapped to disc and removed from the Executor to free memory or Executor instances. A job is inactive if it is either fully executed or waiting for user input (on the KNIME WebPortal). If needed, it will be retrieved from disk automatically.

The configuration option

com.knime.server.job.max_time_in_memory=<duration with unit, e.g. 60m, 36h, or 2d>

controls the period of inactivity allowed before a job will be swapped to disk (default = 60m). If you specify a negative number this feature is disabled and inactive jobs stay in memory until they are discarded.

| There are certain flows that will not be restored in the exact same state that it was in, before it got swapped out. For example, if a flow gets swapped with a loop partially executed, this loop iteration will be reset and the loop execution is restarted. | |

|---|---|

# Job auto-discard

There is an additional threshold for inactivity of a job after which it may be discarded automatically. A discarded job due to inactivity cannot be recovered. The time threshold for a job to be automatically discarded is controlled by setting

com.knime.server.job.max_lifetime=<duration with unit, e.g. 60m, 36h, or 2d>

The default value (if the option is not set) is 7d.

# Managing User and Consumer Access

It is possible to restrict which groups (or which individual users) are eligible to log in as either users or consumers. In this context, a user is someone who logs in from a KNIME Analytics Platform client to e.g. upload workflows, set schedules, or adjust permissions. On the other hand, a consumer is someone who can only execute workflows from either the KNIME WebPortal or via the KNIME Server REST API.

In order to control who is allowed to log in as either user or consumer, the following settings need to be adjusted in the knime-server.config:

com.knime.server.login.allowed_groups: This setting has to include all groups that should be allowed to login to KNIME Server, regardless of whether they are users or consumers.

com.knime.server.login.consumer.allowed_groups: List of groups that should be allowed to use the WebPortal or REST API to execute workflows.

com.knime.server.login.user.allowed_groups: List of groups that should be allowed to connect to KNIME Server from a KNIME Analytics Platform client.

# Usage Example

com.knime.server.login.allowed_groups`=`marketing,research,anaylsts

com.knime.server.login.consumer.allowed_groups`=`marketing,research,anaylsts

com.knime.server.login.user.allowed_groups`=`research

In the above example, we first restrict general access to KNIME Server to individuals in the groups marketing, research, and analysts. All individuals who are not in any of these groups won’t be able to access KNIME Server at all. Next, we allow all three groups to login as consumers via WebPortal or REST API. Finally, we define that only individuals in the group research should be able to log in as users from a KNIME Analytics Platform client.

| By default, these settings are left empty, meaning that as long as users are generally able to login to your KNIME Server (e.g. because they are in the allowed AD groups within your organization), they can log in as either users or consumers. Since the number of available user licenses is typically lower than the number of consumers, it is recommended to restrict user access following the above example. | |

|---|---|

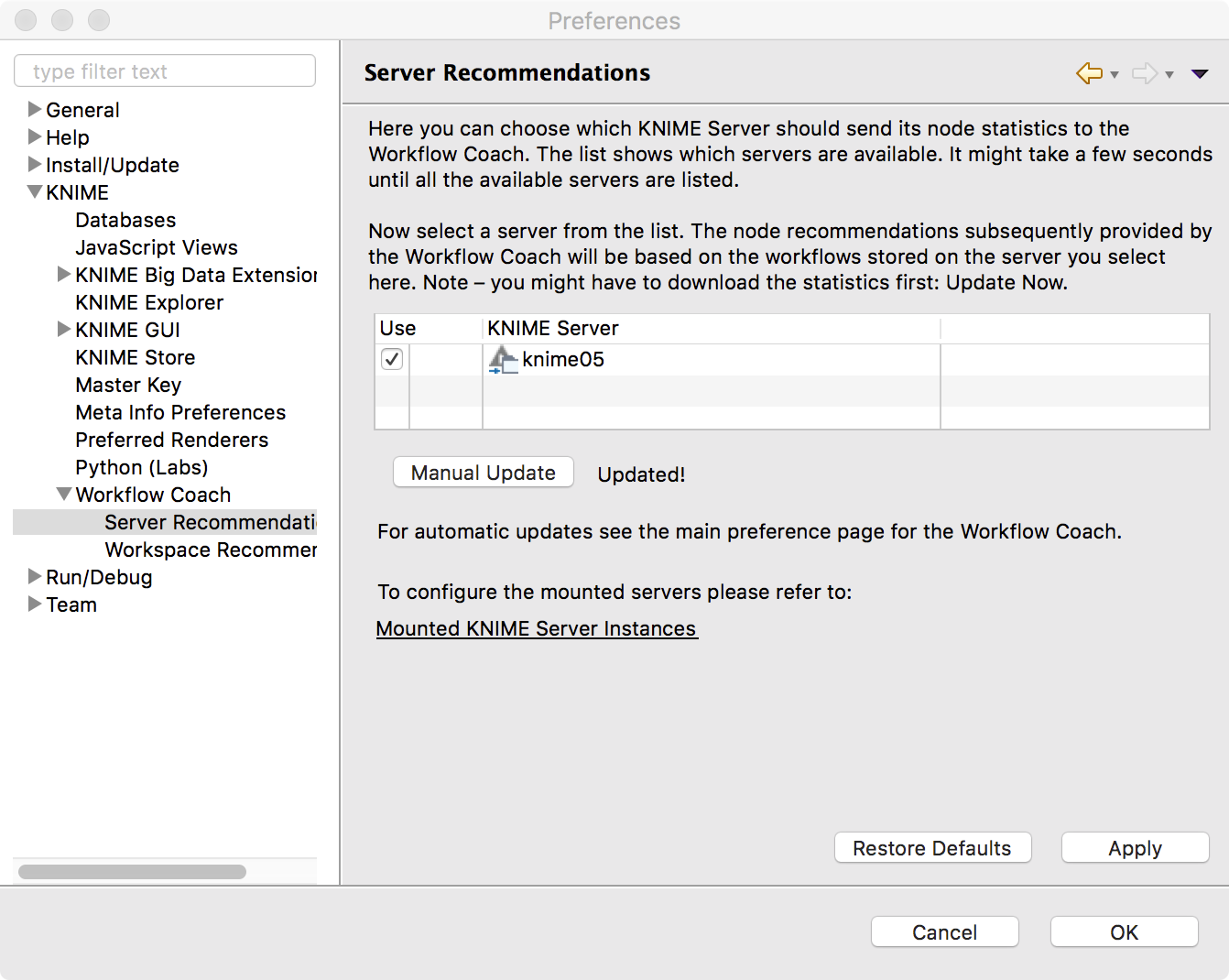

# Executor Preferences

If the KNIME Executor requires certain preferences (e.g. database drivers or path to Python environment), you need to provide a preference files that the Executor(s) can retrieve from the application server.

To get a template of the preferences:

- Start KNIME (with an arbitrary workspace).

- Set all preferences via "File" → "Preferences") and export the preferences via "File" → "Export Preferences". This step can also be performed on a client computer but make sure that any paths you set in the preferences are also valid on the server.

Open the exported preferences and insert the relevant lines into /config/client-profiles/executor.epf

Note: Make sure to specify the paths of all database drivers in the new preference page, in order to be able to execute workflows with database nodes. The page is available in the KNIME → Database Drivers category of the preferences.

| It is recommended to only copy over the settings you will actually use on the Executor, like database drivers or Python preferences. The full preferences export is likely to contain e.g. host-specific paths that are not valid on the target system. | |

|---|---|

We have bundled a file called executor.epf into the /config/client-profiles/executor folder. In order for those preferences to be used, you must edit the knime.ini file of the executor and insert

-profileLocation

http://127.0.0.1:8080/<WebPortal Context ROOT, most likely "knime">/rest/v4/profiles/contents

-profileList

executor

before the line containing -vmargs. This only has to be done in case no Executor has been provided during the installation of KNIME Server, otherwise it is set automatically.

# Adding Executor preferences for headless Executors

In order to be able to execute workflows that contain database nodes that use custom or proprietary JDBC driver files on KNIME Server, the executor.epf file must contain the path to the JDBC jar file, or the folder containing the JDBC driver. This may be specified in the KNIME Analytics Platform (Executor) GUI and the executor.epf file exported as described in the above section. This is the recommended route for systems that have graphical access to the KNIME Analytics Platform (Executor).

Some systems do not have graphical access to the KNIME Analytics Platform (Executor) GUI. In that case the executor.epf can be manually created, or created on an external machine and copied into location on the server. The relevant lines that must be contained in the executor.epf file are:

file_export_version=3.0

\!/=

/instance/org.knime.workbench.core/database_drivers=/path/to/driver.jar;/path/to/driver-folder

/instance/org.knime.workbench.core/database_timeout=60

Note that driver.jar may also reference a folder in some cases (e.g. MS SQL Server and Simba Hive drivers).

| If you are using distributed KNIME Executors, please see the Server-managed Customization Profiles (opens new window) section of the KNIME Database Extension Guide (opens new window) for how to distribute JDBC drivers. | |

|---|---|

# knime.ini file

You might want to tweak certain settings of this KNIME instance, e.g. the amount of available memory or set system properties that are required by some extensions. This can be changed directly in the knime.ini in the KNIME Executor installation folder.

KNIME Server will read the knime.ini file next to the KNIME executable and create a custom ini file for every Executor that is started. However, if you use a shell script that prepares an environment the server may not be able to find the ini file if this start script is in a different folder. In this case the knime.ini file must be copied to /config/knime.ini. If this file exists, the server will read it instead of searching for a knime.ini next to the executable or start script.

# Log files

There are several log files that could be inspected in case of unexpected behavior:

# Tomcat server log

Location: /logs/catalina.yyyy-mm-dd.log

This file contains all general Tomcat server messages, such as startup and shutdown. If Tomcat does not start or the KNIME Server application cannot be deployed, you should first look into this file.

Location: /logs/localhost.yyyy-mm-dd.log

This file contains all messages related to the KNIME Server operation. It does not include messages from the KNIME Executor!

For new installations these files are kept for 90 days before being removed. The default behaviour can be changed by editing the /conf/logging.properties file and amending any entries with:

1catalina.org.apache.juli.FileHandler.maxDays = 90

# KNIME executor log

Location: /.metadata/knime/knime.log

The executor-workspace is usually in the home directory of the operating system user that runs the executor process and is called knime-workspace. If you provided a custom workspace using the -data argument when starting the executor you can find it there.

If you are still using deprecated RMI executors, the executor-workspace is /runtime/runtime_knime-rmi_.

This file contains messages from the KNIME Executor that is used to execute workflows on the server (for manually triggered execution, scheduled jobs, and also for generated reports, if KNIME Report Server is installed)

The executor’s log file rotates every 10MB by default. If you want to increase the log file size (to 100MB for example), you have to append the following line at the end of the executor’s knime.ini:

-Dknime.logfile.maxsize=100m

Also useful in some cases is the Eclipse log file /.metadata/.log

# KNIME Analytics Platform (client) log

Location: /.metadata/knime/knime.log

This file contains messages of the client KNIME application. Messages occurring during server communications are logged there. The Eclipse log of this application is in /.metadata/.log

# Email notification

KNIME Server allows users to be notified by email when a workflow finishes executing. The emails are sent from a single email address which can be configured as part of the web application’s mail configuration. If you don’t want to enable the email notification feature, no email account is required. You can always change the configuration and enter the account details later.

# Setting up the server’s email resource

The email configuration is defined in the web application context configuration file which is /conf/Catalina/localhost/knime.xml (or com.knime.enterprise.server.xml or similar). The installer has already created this file. In order to change the email configuration, you have to modify or add attributes of/to the `` element. All configuration settings must be added as attributes to this element. The table below shows the list of supported parameters (see also the JavaMail API documentation (opens new window)). Note that the mail resource’s name must be mail/knime and cannot be changed.

| Name | Value |

|---|---|

mail.from | Address from which all mails are sent |

mail.smtp.host | SMTP server, required |

mail.smtp.port | SMTP port, default 25 |

mail.smtp.auth | Set to true if the mail server requires authentication; optional |

mail.smtp.user | Username for SMTP authentication; optional |

password | Password for SMTP authentication; optional |

mail.smtp.starttls.enable | If true, enables the use of the STARTTLS command (if supported by the server) to switch the connection to a TLS-protected connection before issuing any login commands. Defaults to false. |

mail.smtp.ssl.enable | If set to true, use SSL to connect and use the SSL port by default. Defaults to false. |

If you do not intend to use the email notification service (available in the KNIME WebPortal (opens new window) for finished workflow jobs), you can skip this step.

Note that the mail configuration file contains the password in plain text. Therefore, you should make sure that the file has restrictive permissions.

# User authentication

As described briefly in the Server architecture (opens new window) section it is possible to use any of the authentication methods available to Tomcat in order to manage user authentication. By default the KNIME Server installer configures a database (H2) based authentication method. Using this method it is possible for admin users to add/remove users/groups via the AdminPortal using a web-browser. Other users may change their password using this technique.

For enterprise applications, use of LDAP authentication is recommended, and user/group management is handled in Active Directory/LDAP itself.

In all cases the relevant configuration information is contained in the

`<Realm className="org.apache.catalina.realm.LockOutRealm">`

tag in /conf/server.xml.

The default configuration uses a CombinedRealm which allows multiple authentication methods to be used together. Examples for each of database, file and LDAP authentication are contained within the default installation. Configuration of all three authentication methods are described briefly in the following sections. In all cases the Tomcat documentation (opens new window) should be considered the authoritative information source.

# LDAP authentication

LDAP authentication is the recommended authentication in any case where an LDAP server is available. If you are familiar with your LDAP configuration you can add the details during installation time, or edit the server.xml file post installation. If you are unfamiliar with your LDAP settings, you may need to contact your LDAP administrator, or use the configuration details for any other Tomcat based system in your organization. Please refer to the KNIME Server Advanced Setup Guide (opens new window) for details on setting up LDAP.

# Connecting to an SSL secured LDAP server

In case you are using encrypted LDAP authentication and your LDAP server is using a self-signed certificate, Tomcat will refuse it. In this case you need to add the LDAP server’s certificate to the global Java keystore, which is located in /lib/security/cacerts:

keytool -import -v -noprompt -trustcacerts -file \

<server certificate> -keystore <jre>/lib/security/cacerts \

-storepass changeit

Alternatively you can copy the cacerts file, add your server certificate, and add the following two system properties to /conf/catalina.properties:

javax.net.ssl.trustStrore=<copied keystore>

javax.net.ssl.keyStorePassword=changeit

# Single-sign-on with LDAP and Kerberos

It is possible to use Kerberos in combination with LDAP for Single-Sign-On for authentication with KNIME Server.

This is an advanced topic and is covered in the KNIME Server Advanced Setup Guide (opens new window).

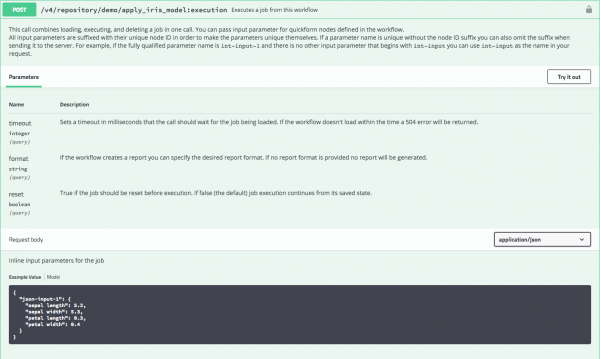

# Token-based authentication

KNIME Server also allows authentication by JWT (JSON Web Tokens) that have previously been issued by the server. The REST endpoint /rest/auth/jwt can be used to acquire such a JWT for the currently logged in user. Subsequent requests need to carry the token in the Authorization header as follows:

Authorization: Bearer xxx.yyy.zzz

where xxx.yyy.zzz is the JWT. Token-based authentication is enabled by default and cannot be disabled. However, you can restrict the maximum lifetime of JWTs issued by the server via the server configuration option com.knime.server.login.jwt-lifetime, see section KNIME Server configuration file options (opens new window).

The OpenAPI documentation for the REST API which can be found at: https:///knime/rest/doc/index.html#/Session should be considered the definitive documentation for this feature.

# Large number of users in a group

Since the JWT includes the group membership for the user, this can get very large in some cases. JWTs with more than 30 groups and that are larger than 2kB are now compressed. If they are still larger than 7kB a warning is logged with hints how to resolve potential problems.

One solution is to increase the maximum HTTP header size in Tomcat by adding the attribute maxHttpHeaderSize="32768" to all defined Connectors in the server.xml (the default is 8kB). In case Tomcat is running behind a proxy, the limit may need to be increased there, too. In case of Apache it’s the global setting LimitRequestFieldSize 32768.

# Database-based authentication

Database-based authentication is recommended to be used by small workgroups who do not have access to an LDAP system, or larger organisations in the process of trialing KNIME Server. If using the previously described H2 database it is possible to use the AdminPortal to manage users and groups. It is possible to use other SQL databases e.g. PostgreSQL to store user/group information, although in this case it is not possible to use the AdminPortal to manage users/groups, management must be done in the database directly.

For default installations this authentication method is enabled within the server.xml file. No configuration changes are required. In order to add/remove users, or create/remove groups the administration pages of the WebPortal can be used. The administration pages can be located by logging into the WebPortal as the admin user, see section Administration pages (opens new window) on the KNIME WebPortal Administration Guide for more details.

Batch insert/update of usernames and roles is possible using the admin functionality of the KNIME Server REST API. This is described in more detail in the section RESTful webservice interface (opens new window). A KNIME Workflow is available in the distributed KNIME Server installation package that can perform this functionality.

# File-based authentication

For KNIME Server versions 4.3 or older the default configuration used a file-based authentication which we describe for legacy purposes. It is now recommended to use either database-based or LDAP authentication. The advantages of each are described in the corresponding sections above and below.

The XML file /conf/tomcat-users.xml contains examples on how to define users and groups (roles). Edit this file and follow the descriptions. By default this user configuration file contains the passwords in plain text. Encrypted storage of passwords is described in the Tomcat documentation.

# Configuring a license server

Since version 4.3 KNIME Server can distribute licenses for extensions to the KNIME Analytics Platform (e.g. Personal Productivity, TeamSpace, or Big Data Connectors) to clients. In order to use the license server functionality, you require a master license. Every KNIME Server Large automatically comes with TeamSpace client licenses for the same number of users as the server itself.

The master license file(s) should be copied into the licenses folder of the server repository (next to the server’s license). The server will automatically pick up the license and offer them to clients. For configuring the client, see the section about "Retrieving client licenses" in the KNIME Explorer User Guide (opens new window).

Client licenses distributed by the server are stored locally on the client and are tied to the user’s operating system name (not the server login!) and its KNIME Analytics Platform installation and/or the computer. They are valid for five days by default which means that the respective extensions can be used for a limited time even if the user doesn’t have access to the license server.

If the user limit for a license has been reached, no further licenses will be issued to clients until at least one of the issued licenses expires. The administrator will also get a notification email in this case (if their email notification is configured, see previous section Email notification (opens new window)).

# License renewal

If the server is not behaving as expected due to license issues, please contact KNIME by sending an email to support@knime.com or to your dedicated KNIME support specialist.

If the license file is missing or is invalid a message is logged to the server’s log file during server start up. KNIME clients are not able to connect to the server without a valid server license. Login fails with a message "No license for server found".

If the KNIME Server license has expired connecting clients fail with the message "License for enterprise server has expired on …". Please contact KNIME to renew your license.

If more users than are licensed attempt to login to the WebPortal, some users will see the message: "Maximum number of WebPortal users exceeded. The current server license allow at most

After you receive a new license file, remove the old expired license from the /licenses folder. In case there are multiple license files in this folder, find the one containing a line with

"name" = "KNIME Server"

and the "expiration date" set to a date in the past. The license file is a plain text file and can be read in any text editor.

Store the new license file in the license folder with the same owner and the same permissions as the old file.

The new license is applied immediately; a server restart is not necessary.

# Backup and recovery

The following files and/or directories need to be backed up:

- The full server repository folder, except the

tempfolder - The full Tomcat folder

- In case you installed your own molecule sketcher for the KNIME WebPortal (see above), also backup this folder.

A backup can be performed while the server is running but it’s not guaranteed that a consistent state will be copied as jobs and the workflow repository may change while you are copying files.

In order to restore a backup copy the files and directories back to their original places and restart the server. You may also restore to different location but make sure to adjust the paths in the start script, the repository location in the context configuration file, and paths in the server configuration.

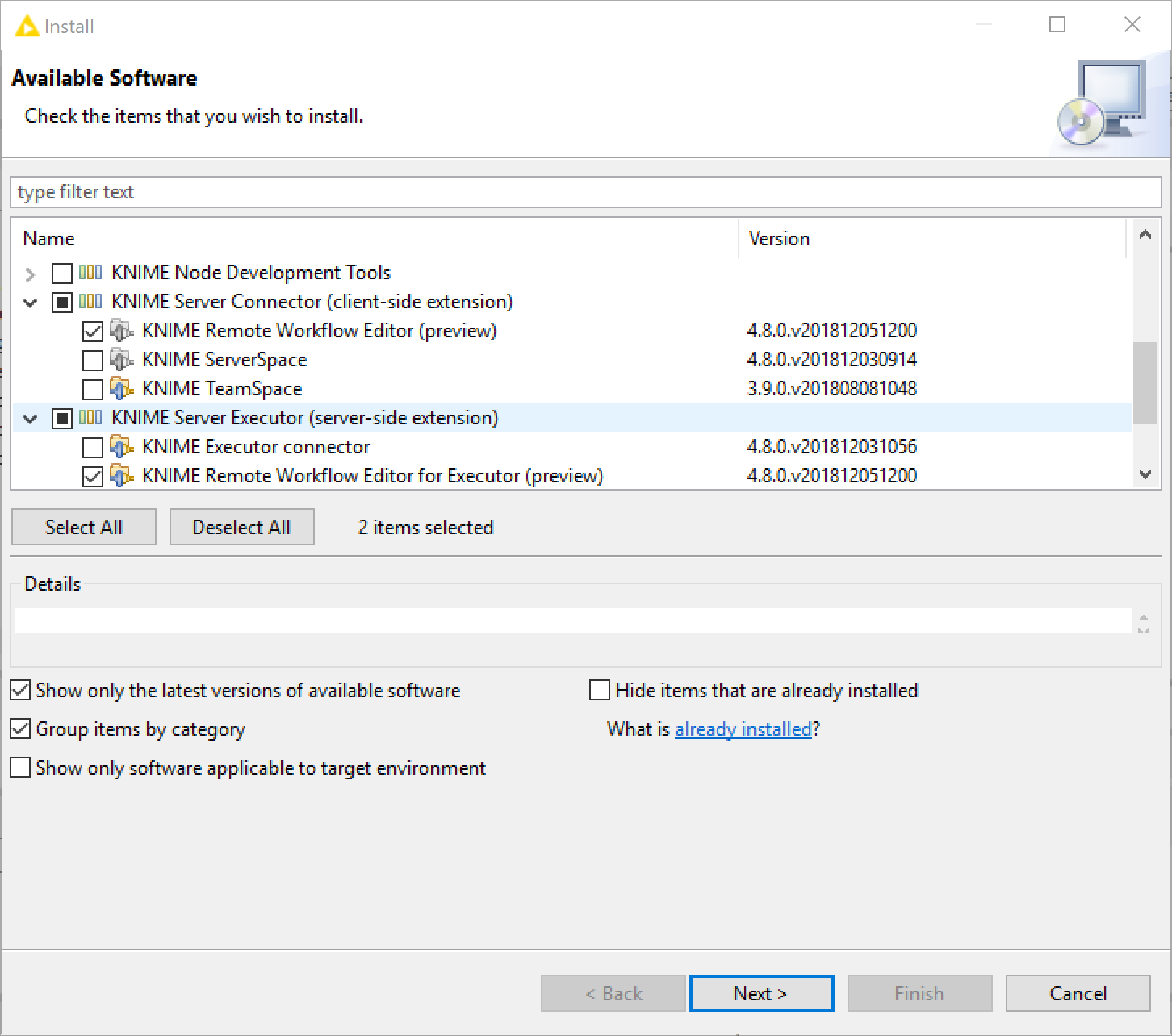

# KNIME Executor installation

Install the open-source KNIME Analytics Platform 4.2 on the server. Install all additional extensions users may need to run their workflows on the server. Make sure to include the "KNIME Report Designer" extension. Also install all extensions listed in the "KNIME Server Executor" category, either from the default online update site that or from the update site archive that you can get from the download area there. Note that the versions of the KNIME Server Executor extensions must match the server’s version (e.g. "4.11")! Therefore, please check that you are installing from these extensions from correct update sites if you are not using the latest released versions of both the server and Executor.

| The easiest way to achieve this is to download the KNIME Executor full build from here (opens new window) and extract it. It includes all extensions required for running as an Executor for a KNIME Server. | |

|---|---|

Make sure that users other than the installation owner either have no write permissions to the installation folder at all or that they have full write permission to at least the "configuration" folder. Otherwise you may run into strange startup issues. We strongly recommend revoking all write permissions from everybody but the installation owner.

If the server does not have internet access, you can download zipped update sites (from the commercial downloads page) which contain the extensions that you want to install. Go to the KNIME preferences at File → Preferences → Install/Update → Available Software Sites and add the zip files as "Archives". In addition you need to disable all online update sites on the same page, otherwise the installation will fail. Now you can install the required extensions via File → Install KNIME Extensions….

# Installing additional extensions

The easiest way to install additional extensions into the Executor (e.g. Community Extensions or commercial 3rd party extensions) is to start the Executor in GUI mode and install the extensions as usual. In case you don’t have graphical access to the server you can also install additional extensions without a GUI. The standard knime executable can be started with a different application that allows changing the installation itself:

./knime -application org.eclipse.equinox.p2.director -nosplash

-consolelog -r _<list-of-update-sites>_ -i _<list-of-features>_ -d _<knime-installation-folder>_

Adjust the following parameters to your needs:

``: a comma-separated list of remote or local update sites to use. ZIP files require a special syntax (note the single quotes around the argument). Example:

-r 'http://update.knime.org/analytics-platform/4.2,jar:file:/tmp/org.knime.update.analytics-platform_4.2.0.zip!/'Some extensions, particularly from community update sites, have dependencies to other update sites. In those cases, it it necessary to list all relevant update sites in the installation command. - Adding the following four update sites should cover the vast majority of cases:

- http://update.knime.com/analytics-platform/4.2

- http://update.knime.com/community-contributions/4.2

- http://update.knime.com/community-contributions/trusted/4.2

- http://update.knime.com/partner/4.2

- Adding the following four update sites should cover the vast majority of cases:

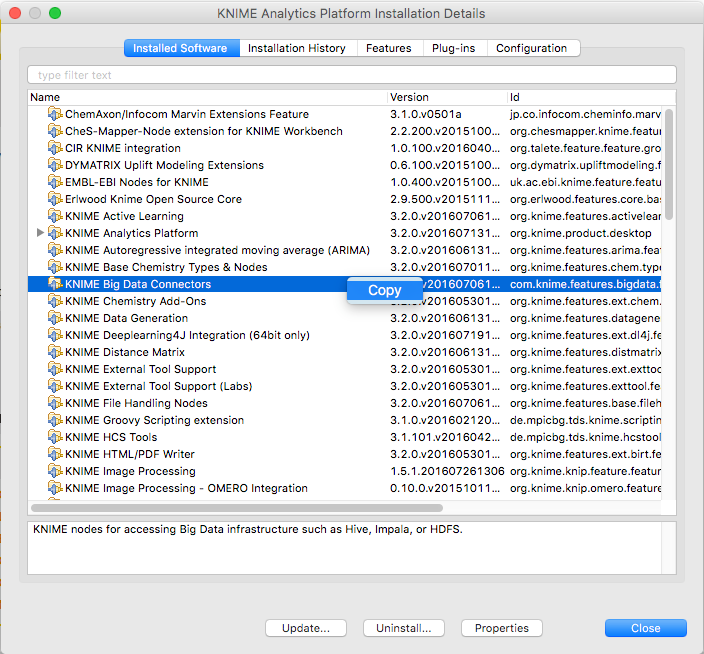

``: a comma-separated list (spaces after commas are not supported) of features/extensions that should be installed. You can get the necessary identifiers by looking at Help → About KNIME → Installation Details → Installed Software in a KNIME instance that has the desired features installed. Take the identifiers from the "Id" column and make sure you don’t omit the

.feature.groupat the end (see also screenshot on the next page). Example:-i org.knime.product.desktop,org.knime.features.r.feature.groupYou can get a list of all installed features with:

./knime -application org.eclipse.equinox.p2.director -nosplash \ -consolelog -lir -d _<knime-installation-folder_``: the folder into which KNIME Analytics Platform should be installed (or where it is already installed). Example:

-d /opt/knime/knime_4.2

# Updating the Executor

Update of an existing installation can be performed by using the update-executor.sh script in the root of the installation. You only have to provide a list of update sites that contain the new versions of the installed extensions and all installed extension will be updated (given that an update is available):

./update-executor.sh http://update.knime.com/analytics-platform/4.2

If you want to selectively update only certain extensions, you have to build the update command yourself. An update is performed by uninstalling (-u) and installing (-i) an extension at the same time:

./knime -application org.eclipse.equinox.p2.director -nosplash -consolelog -r <list-of-update-sites> -i

<list-of-features> -u <list-of-features> -d <knime-installation-folder>

例如,要更新大数据扩展,请运行以下命令:

./knime -application org.eclipse.equinox.p2.director -nosplash \

-consolelog -r http://update.knime.com/analytics-platform/4.2 -i

org.knime.features.bigdata.connectors.feature.group,org.knime.features.bigdata.spark.feature.group

-u org.knime.features.bigdata.feature.group,org.knime.features.bigdata.spark.feature.group -d $ PWD

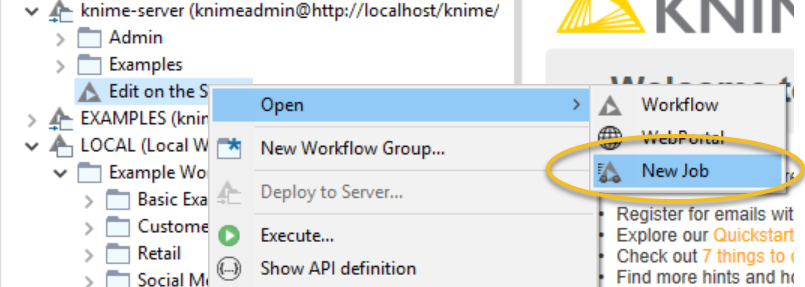

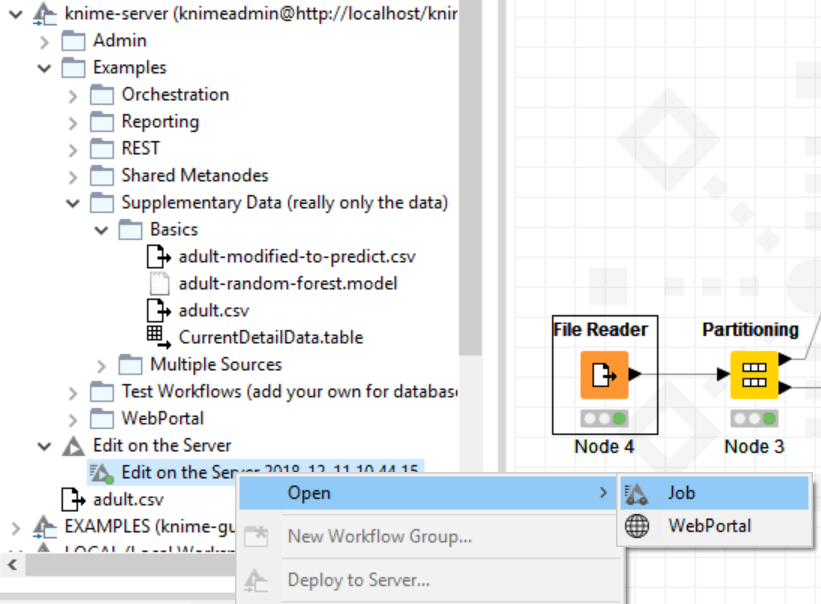

# 启用工作流程执行

安装了带有所有必需扩展程序的KNIME Executor后,您必须告诉服务器在哪里可以找到Executor。将com.knime.server.executor.knime_exe服务器配置中的值设置 为knime 可执行文件。该路径可以是绝对路径,也可以是相对于服务器配置文件夹(/config)的路径。可以在服务器运行时更改执行器的路径,该路径将在启动新执行器时使用(例如,在加载第一个工作流时)。

对于Windows用户:对于服务器配置文件中的路径,请使用正斜杠(“ /”)或双反斜杠(“ \”)。单个反斜杠用于转义字符。

有时,在执行器中运行的工作流程作业想要访问服务器上的文件,例如,通过相对于工作流程的URL或使用服务器的安装点ID的URL。由于执行器无法使用用户密码对服务器进行身份验证(因为服务器和执行器通常都不知道),因此在启动(或计划)工作流程时,服务器会生成令牌。该令牌表示用户,包括其创建时的组成员身份 。如果在工作流作业仍在运行或有进一步的计划执行期间更改组成员身份,这些更改将不会反映在工作流执行中。同样,如果已经完全撤消了用户的访问权限,则现有(计划的)作业仍可以访问服务器存储库。

如果Executor在与服务器不同的计算机上运行,请注意以下几点:服务器和Executor之间的通信部分通过REST接口执行,例如,当工作流程从服务器存储库请求文件时。因此,执行程序必须知道服务器的地址。服务器尝试自动检测其地址,并将其发送给执行程序。但是,如果服务器在代理服务器(例如Apache)后面运行,或具有与内部服务器不同的外部IP地址,则自动检测将提供错误的地址,执行器将无法访问服务器。在这种情况下,您必须将配置选项 com.knime.server.canonical-address设置为服务器的规范地址,例如 http://knime-server.behind.proxy/(您无需提供服务器应用程序的路径)。执行者必须可以使用该地址。

# KNIME Executors

# Distributed KNIME Executors: Introduction

As part of a highly available architecture, KNIME Server 4.11 allows you to distribute execution of workflows over several Executors that can sit on separate hardware resources. This allows KNIME Server to scale workflow execution with increasing load because it is no longer bound to a single computer.

If you’re planning to use the distributed KNIME Executors in production environments please get in touch with us directly for more information.

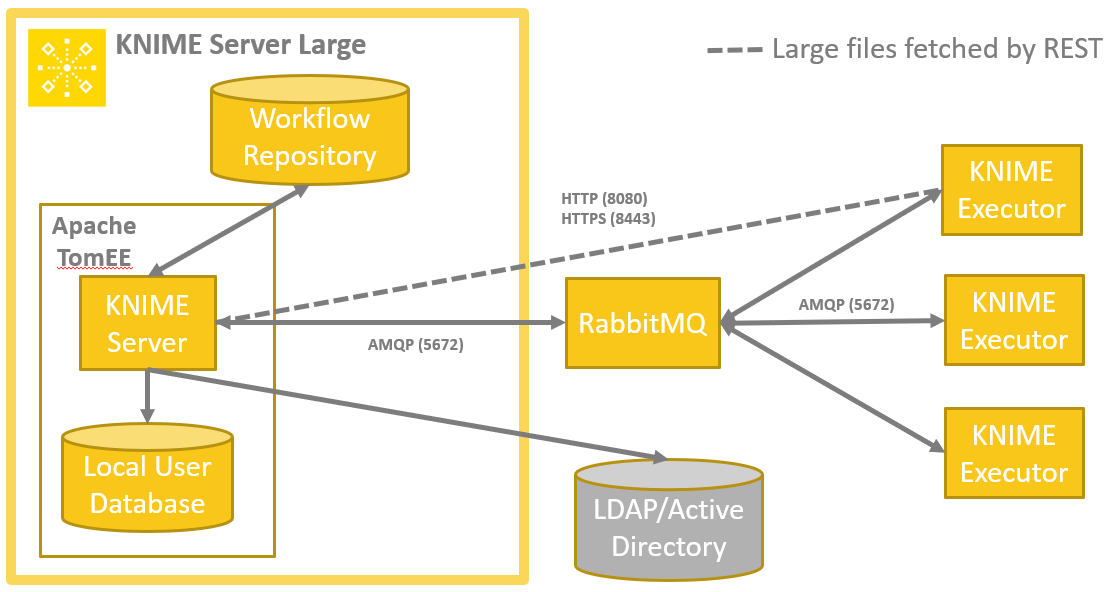

Installation, configuration, and operation is very similar to the single Executor setup. The server communicates with the Executors via a message queueing system (and HTTP(S)). We use RabbitMQ for this purpose, and it’s recommended, although not required, to install that on a separate machine as part of a highly available architecture.

# Distributed KNIME Executors: Installation instructions

Enabling KNIME Executors consists of the following steps:

- In case you haven’t installed KNIME Server already, please follow the KNIME Server Installation Guide (opens new window).

- Shut down the server if it has been started by the installer.

- Install RabbitMQ following the instructions below.

- Adjust configuration files for the server and Executor following the instructions below.

- Start the server and one or more Executors.

# Installing RabbitMQ

The server talks to the Executors via a message queueing system called RabbitMQ (opens new window). This is a standalone service that needs to be installed in addition to KNIME Server and the executors. You can install it on the same computer as KNIME Server or on any other computer directly reachable by both KNIME Server and the Executors.

KNIME Server requires RabbitMQ 3.6+ which can be installed according to the Get Started documentation on their web page (opens new window).

Make sure RabbitMQ is running, then perform the following steps:

- Enable the RabbitMQ management plug-in by following the online documentation (opens new window)

- Log into the RabbitMQ Management which is available at

http://localhost:15672/(with user guest and password guest if this is a standard installation). The management console can only be accessed from the host on which RabbitMQ is installed. - Got to the Admin tab and add a new user, e.g. knime.

- Also in the Admin tab add a new virtual host (select the virtual hosts section on the right), e.g. using the hostname on which KNIME Server is running or simply knime-server.

- Click on the newly created virtual host, go to the Permissions section and set permission for the new knime user (all to ".*" which is the default).

# Connecting Server and KNIME Executors

KNIME Server and the KNIME Executors now need to be configured to connect to the message queue.

For KNIME Server you must specify the address of RabbitMQ instead of the path to the local Executor installation in the knime-server.config. I.e. comment out the com.knime.server.executor.knime_exe option (with a hash sign) and add the option com.knime.enterprise.executor.msgq. The latter takes a URL to the RabbitMQ virtual host: amqp://:@/, e.g.

com.knime.enterprise.executor.msgq=amqp://<username>:<password>@rabbitmq-host/knime-server

Note that any special characters in the password must be URL encoded.

The same URL must also be provided to the Executor as system property via the knime.ini:

-Dcom.knime.enterprise.executor.msgq=amqp://<username>:<password>@rabbitmq-host/knime-server

Alternatively you can provide the message queue address as an environment variable:

KNIME_EXECUTOR_MSGQ=amqp://<username>:<password>@rabbitmq-host/knime-server

In case RabbitMQ High Available Queues (opens new window) are used, simply add additional : separated by commas to the initial amqp address (this is supported with KNIME Server 4.11.3 onward): | |

|---|---|

-Dcom.knime.enterprise.executor.msgq=amqp://<username>:<password>@rabbitmq-host/knime-server,amqp://<rabbitmq-host2>:<port2>,amqp://<rabbitmq-host3>:<port3>

In order to use RabbitMQ, you need to explicitly deactivate the embedded Qpid message broker by setting com.knime.enterprise.executor.embedded-broker=false in knime-server.config. Qpid does not support more than one KNIME Executor, and it doesn’t support Executors running on separate hosts. | |

|---|---|

While commands between the server and KNIME Executors are exchanged via the message queue, actual data (e.g. workflows to be loaded) are exchanged via HTTP(S). Therefore, the KNIME Executors must know where to reach the server. The server tries to auto-detect its own address however in certain cases this address is not reachable by the KNIME Executors or — in case of https connections — the hostname doesn’t match the certificate’s hostname. In such cases you have to specify the correct public address in the knime-server.config with the option com.knime.server.canonical-address, e.g.

com.knime.server.canonical-address=https://knime-server:8443/

You don’t have to specify the context path as this is reliably auto-detected. Now you can start the server.

The KNIME Executors must be started manually, the server does not start them. In order to start an Executor (on any machine) launch the KNIME application (that has been created by the installer) with the following arguments:

./knime -nosplash -consolelog -application com.knime.enterprise.slave.KNIME_REMOTE_APPLICATION

You can also add these arguments at the top of the knime.ini if the installation is only used as an Executor. You can start as many KNIME Executors as you like and they can run on different hosts. They will all connect to RabbitMQ (you can see them in the RabbitMQ Management in the Connections tab).

在外壳中启动Executor时,可以使用非常简单的命令行界面来控制Executor。help在Executor>提示符下输入以获取可用命令的列表。

在Windows上,将为执行器进程打开一个单独的窗口。如果在启动过程中出现问题(例如,执行器无法从服务器获取核心令牌),则此窗口将立即关闭。在这种情况下,您可以添加-noexit到上面的命令中以使其保持打开状态,并查看日志输出或打开日志文件(默认情况下为打开),/knime-workspace/.metadata/knime/knime.log 除非您使用提供了不同的工作区位置-data。

您可能会发现执行者使用KNIME服务器提供的自定义配置文件会有所帮助。在这种情况下,请参阅“文档”部分中的“自定义” (opens new window)。例如,编辑执行器的启动命令将应用executor配置文件。

./knime -nosplash -consolelog -profileLocation http:// knime-server:8080 / knime / rest / v4 / profiles / contents -profileList

执行器com.knime.enterprise.slave.KNIME_REMOTE_APPLICATION

# 将KNIME执行器作为服务运行

也可以将KNIME Executors作为服务在系统启动期间自动启动(并在关闭期间停止)。当不在docker部署上运行时,这是推荐使用的方法。

# 带有systemd的Linux

仅在使用systemd的Linux发行版 (例如Ubuntu> = 16.04,RHEL 7.x及其衍生版本)上支持将KNIME Executors作为服务运行。以下步骤假定您已安装KNIME Executor,其中包含KNIME executor安装 (opens new window) 一节中介绍的KNIME Executor连接器扩展。

复制整个文件夹

<knime安装> / systemd /到文件系统的根目录。该文件夹包含knime-executor的systemd服务描述和允许配置服务的替代文件(例如,文件系统位置或应在其中运行Executor的用户ID)。

跑

systemctl守护程序重新加载跑

systemctl编辑knime-executor.service在将打开的编辑器中调整设置,然后保存更改。确保

User此文件中指定的内容在系统上存在。否则,除非您的systemd版本支持,否则启动将失败DynamicUser。在这种情况下,将创建一个临时用户帐户。通过启用服务

systemctl启用knime-executor.service

# 视窗

在Windows上,可以使用NSSM(非吸吮服务管理器)将KNIME Executors作为Windows服务运行。以下步骤假定您具有KNIME Analytics Platform安装,其中包含KNIME Server安装指南 (opens new window)中所述的 KNIME Executor连接器扩展 。

编辑

<knime安装> /install-executor-as-service.batand adjust the variables at the top of the file to your needs.

Run this batch file as administrator. This will install the service.

Open the Windows Services application, look for the KNIME Executor service in the list and start it.

If you want to remove the Executor service again, run the following as administrator:

<knime-installation>/remove-executor-as-service.bat

Note that if you move the KNIME Executor installation you first have to remove the service before moving the installation and then re-create it.

# Load throttling

If too many jobs are sent to KNIME Executors this may overload them and all jobs running on that Executor will suffer and potentially even fail if there aren’t sufficient resources available any more (most notably memory). Therefore an Executor can reject new jobs based on its current load. By default an Executor will not accept new jobs any more if its memory usage is above 90% (Java heap memory, averaged over 1-minute) or the average system load is above 90% (averaged over 1-minute). These settings can be changed by two system properties in the Executor’s knime.ini file:

Some options can be set as property in the knime.ini file as well as by defining an environment variable (Env). The environment variable changes will only take effect after a restart of the KNIME Executor. If the environment variable for an option is set, the property in the 'knime.ini' file will be ignored.

-Dcom.knime.enterprise.executor.heapUsagePercentLimit=The average Heap space usage of the executor JVM over one minute. Default 90 percent Env: KNIME_EXECUTOR_HEAP_USAGE_PERCENT_LIMIT= |

|---|

-Dcom.knime.enterprise.executor.cpuUsagePercentLimit=The average CPU usage of the executor JVM over one minute. Default 90 percent. Env: KNIME_EXECUTOR_CPU_USAGE_PERCENT_LIMIT= |

If only one KNIME Executor is available it will accept every job despite the defined Heap space and CPU limits. With KNIME Server 4.9.0 and later an option to change this behavior has been added. For more information see the Automated Scaling (opens new window) section.

# Resource throttling

In some cases you may wish to restrict the access to the total available CPU cores/threads on the machine. Examples of when this may be desired are: when additional KNIME Executor cores on the machine must be reserved for another task, or in a local docker setup where containers detect all cores available on a machine. Both of these setups are typically not recommended as it can be difficult to guarantee good resource sharing, generally it’s better to run workloads on individual machines or isolated pods using Kubernetes.

/instance/org.knime.workbench.core/knime.maxThreads=This setting must be added to the preferences.epf file used by the Executor, or alternatively to the preference profile for the Executor. The number of threads that KNIME Executor will use to process workflows. In normal operation you do not need to set this preference. The Executor will auto-detect the number of cores available on the system, and set knime.maxThreads=2*num_cores. Typically the JVM will identify hyper-threaded cores as a 'core'. By default the Executor will use two times the numbers of cores available to the JVM.

# Automated Scaling

Currently we allow automated scaling by monitoring Executor heap space and CPU usage. It is also possible to blend these metrics using custom logic to invent custom scaling metrics. In some cases it may also be desirable to allow jobs to stack up on the queue and use the 'queue depth' as a fourth metric type. In order to do so, it is necessary to edit the knime.ini of the Executors.

-Dcom.knime.enterprise.executor.allowNoExecutors=com.knime.server.job.default_load_timeout and the com.knime.explorer.job.load_timeout in the Analytics Platform to ensure sensible behaviour. The default is false, which emulates the behaviour before the setting was added.

When using an automatic scaling setup, jobs that are waiting for an Executor to start, might run into timeouts. The default wait time for a job to be loaded by an Executor can be increased by setting the com.knime.server.job.default_load_timeout option in the server configuration as described in section Server configuration files and options (opens new window).

When starting jobs interactively using the Analytics Platform, the connection might also time out. The timeout can be increased by adding the following option to the knime.ini file of the KNIME Analytics Platform.

-Dcom.knime.explorer.job.load_timeout=Specifies the timeout to wait for the job to be loaded. The default duration is 5m.

Generally, the timeout in the Analytics Platform should be higher than the timeout set in the KNIME server. This prevents the interactive session from running into read timeouts.

# 重新连接到消息队列

万一与消息队列的连接丢失(例如,通过重新启动RabbitMQ),则从KNIME Server 4.11开始,执行程序将尝试重新连接到消息队列。可以在执行knime.ini程序的文件中调整以下选项:

-Dcom.knime.enterprise.executor.connection_retries=指定应尝试重新连接到消息队列的重试次数。在每次尝试之间,执行程序等待10秒。默认值设置为,9即执行器尝试重新连接90秒钟。请注意,也可以通过环境变量设置此选项KNIME_EXECUTOR_CONNECTION_RETRIES,该变量优先于knime.ini文件中设置的系统属性。对于number of retries小于0的重试次数是无限的。

# 工作池

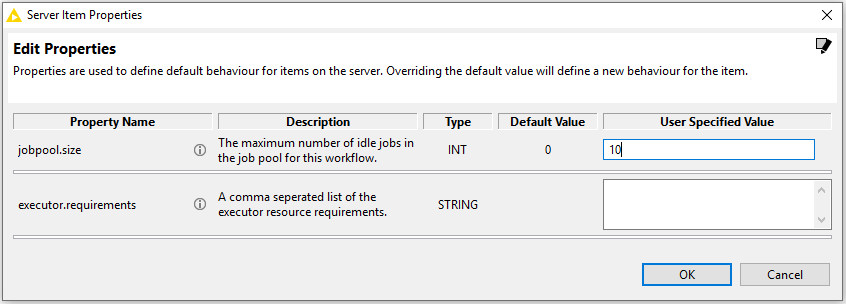

对于频繁执行的工作流程,现在(从KNIME Server 4.8.1开始)可以将来自该工作流程的一定数量的作业保留在内存中。这消除了首次使用该作业后在执行程序中加载工作流的开销。在作业加载时间比作业执行时间大的情况下,这应该特别有益。

# 启用工作池

为了启用作业池,必须在应合并的工作流上设置属性。可以在KNIME Explorer(从KNIME Server 4.9.0开始)中进行设置,方法是右键单击工作流程并选择'Properties ...'。将打开一个对话框,使用户可以查看和编辑工作流程的属性。

否则,也可以通过REST调用来设置工作流属性,例如,使用curl:

curl -X PUT -u <用户>:<密码> http:// <服务器地址> / knime / rest / v4 /存储库/ <工作流程>:properties?com.knime.enterprise.server.jobpool.size = <泳池面积>

这将为工作流工作流启用最多具有池大小作业的池。

仅在一次调用(即当前:execution资源)中进行加载,执行和丢弃的单次调用执行才有可能。客户端通过多个REST调用(加载,执行,重新执行,丢弃)执行的作业无法合并。

# 禁用工作池

可以通过在KNIME Explorer中或通过REST调用将作业池大小设置为0来禁用作业池:

curl -X PUT -u <用户>:<密码> http:// <服务器地址> / knime / rest / v4 /存储库/ <工作流程>:properties?com.knime.enterprise.server.jobpool.size = 0

# 使用工作池

为了利用池中的作业,必须调用特殊的REST资源来执行作业。不必呼唤,:execution您必须致电:job-pool。除此之外,这两个调用在语义和允许的参数方面是相同的。

执行合并的作业可能如下所示:

curl -u <用户>:<密码> http:// <服务器地址> / knime / rest / v4 /存储库/ <工作流>:作业池?p1 = v1&p2 = v2

This will call workflow passing v1 for input parameter p1 and v2 for input parameter p2. Calls using POST will work in a similar way using the :job-pool resource.

# Behaviour of job pools

Job pools exhibit a certain behaviour which is slightly different from executing a non-pooled job. Clients should be aware of those differences.

- If the pool is empty (either intially or if all pooled jobs are currently in use) the job will be loaded from the workflow and thus the call will take longer.

- A used job will be put back into the pool right after the result has been returned if the pool isn’t already full. Otherwise the job will be discarded.

- Pooled jobs are tied to the user that triggered initial loading of the job. A pooled job will never be shared among different users.

- If there is no job in the pool for the current user, the oldest job in the pool from a different user will be removed. This can lead to contention if there are more distinct users calling out to the pool than the pool size.

- Pooled jobs will be removed if they are unused for more than the configured job swap timeout (see the server configuration options (opens new window)).

- A pooled job without any input nodes will be reset before every invocation, even the first one! This is different from executing a non-pooled job but is required for consistent behaviour across multiple invocations. Otherwise the first and subsequent operations may behave differently if the workflow is saved with some executed nodes.

- In a pooled job with input nodes all of them will receive input values before execution: either the value that has been passed in the call, or if no explicit value has been provided its default value. This means that all input nodes will be reset prior to execution and not just the nodes explicitly set in the call. Again, this is different from executing a non-pooled job where only input nodes with explicitly provided values will be reset but required for consistency. Otherwise the results of a call may depend on the parameters passed in the previous call.

# Workflow Pinning

Workflow Pinning can be used to let workflows only be executed by a specified subset of the available KNIME Executors when distributed KNIME Executors (opens new window) are enabled.

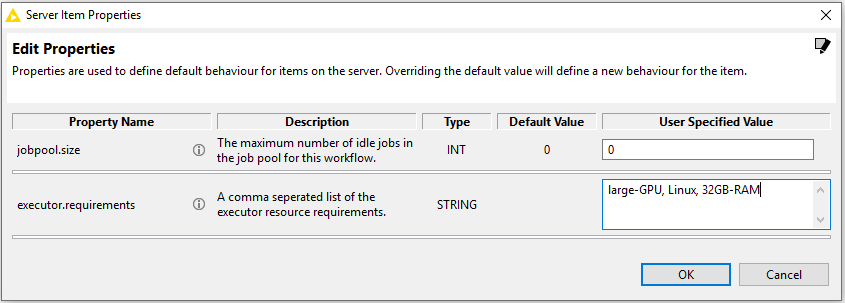

For workflows that need certain system requirements (e.g. specific hardware, like GPUs, or system environments, like Linux) it’s now possible (starting with KNIME Server 4.9.0) to define such Executor requirements per workflow. Only KNIME Executors that fulfill the Executor requirements will accept and execute the workflow job. To achieve this behaviour, a property has to be set for the workflows. Additionally, the system admin of the KNIME Executors has to specify a property for each Executor seperately. The properties consist of values that define the Executor requirements, set for a workflow, and Executor resources, set for an Executor, respectively.

# Prerequisites for workflow pinning

In order to use workflow pinning, the KNIME Server Distributed Executors (opens new window) must be enabled and RabbitMQ (opens new window) must be installed. Otherwise, the set Executor requirements are ignored.

# Setting executor.requirements property for a workflow

Executor requirements for a workflow can be defined by setting a property on the workflow. The Executor requirements are a simple comma-seperated list of user-defined values. Setting workflow properties can be done in the KNIME Explorer by right-clicking on a workflow and selecting 'Properties…'. A dialog will open that lets the user view and edit the properties of a workflow.

Alternatively, workflow properties can also be set via a REST call, e.g. using curl:

curl -X PUT -u <用户>:<密码> http:// <服务器地址> / knime / rest / v4 /存储库/ <工作流程>:properties?com.knime.enterprise.server.executor.requirements = <执行者要求>

这将为工作流工作流设置执行者要求执行者要求。

# 为执行者设置executor.resources属性

要定义执行者可以提供的资源,必须为执行者设置一个属性。这可以通过两种方式完成:

在执行器的系统上设置环境变量。变量的名称必须为“ KNIME_EXECUTOR_RESOURCES”,并且该值必须是用户定义值的逗号分隔列表。

KNIME_EXECUTOR_RESOURCES =值1,值2,值3在knime.ini文件中设置系统属性,该文件位于Executor的安装文件夹中。该文件包含执行器的配置设置,即Java虚拟机使用的选项。该属性的名称必须为“ com.knime.enterprise.executor.resources”,并且该值必须是用户定义值的逗号分隔列表。

-Dcom.knime.enterprise.executor.resources = value1,value2,value3

| 如果同时指定了环境变量和系统属性,则它们具有优先级。 | |

|---|---|

# 删除工作流程的executor.requirements属性

通过将属性设置为空字段可以删除执行器要求。可以在KNIME Explorer中或通过REST调用来完成:

curl -X PUT -u <用户>:<密码> http:// <服务器地址> / knime / rest / v4 /存储库/ <工作流程>:properties?com.knime.enterprise.server.executor.requirements =

# 删除执行者的executor.resources属性

可以通过完全删除环境变量或完全删除knime.ini文件中的属性来删除属性,具体取决于设置属性的方式。或者,也可以通过将环境变量的值或knime.ini文件中的属性的值保留为空来删除该属性。

| 必须重新启动执行程序才能应用更改。 | |

|---|---|

# 执行人要求的行为

执行者只有能够满足为工作流程定义的所有执行者要求,才接受工作。否则,它只会忽略作业。

没有执行者要求的工作将被所有可用的执行者接受。

executor.requirements属性值只需是Executor定义的executor.resources属性值的子集,工作流就可以被Executor接受以执行。

如果没有任何执行者可以满足执行者的要求,则排队的作业将被丢弃。

如果适当的执行器由于其负载太高而无法接受新作业,则新排队的作业将在超时(通常在60秒后)中运行并自行丢弃,请参阅负载限制 (opens new window)。

例: Workflow1执行程序。要求:medium_RAM,两个GPU,Linux Workflow2执行程序。要求:小型RAM,Linux Workflow3执行程序。要求: Executor1 executor.resources:小型RAM,Linux,两个GPU Executor2 executor.resources:medium_RAM,Windows,两个GPU 两个执行者都将忽略Workflow1,并将其丢弃。 工作流程2将被执行者2忽略,并被执行者1接受。 任何可用的执行者都将接受Workflow3。

# CPU和RAM要求

从KNIME Server 4.11开始,可以定义工作流程的CPU和RAM要求。默认情况下,这些要求都被忽略和残疾人,除非默认值中的至少一个com.knime.server.job.default_cpu_requirement或 com.knime.server.job.default_ram_requirement的 KNIME服务器配置文件的选项 (opens new window)设置。

# 设置工作流程的CPU和RAM需求属性

CPU and RAM requirements can be set in the same way as Executor requirements and is described in Setting executor.requirements property for a workflow (opens new window). To set the CPU and RAM requirements the following keywords have been introduced:

cpu=The number of cores needed to execute the workflow. Note, that this value also allows decimals with one decimal place (further decimal places are ignored) in case workflows are small and don’t need a whole core. The default is 0. |

|---|

ram=An integer describing the size of memory needed for execution. The following units are allowed: GB (Gigabyte) and MB (Megabyte). In case no unit is provided it is automatically assumed to be provided in megabytes. The default is 0MB |

In case no CPU or RAM requirement has been set for the workflow the default values com.knime.server.job.default_cpu_requirement and com.knime.server.job.default_ram_requirement defined in the KNIME Server configuration file (opens new window) are used. If both default values are either not set at all or set to 0 the CPU and RAM requirements of workflows are ignored.

# Setting CPU and RAM properties for a KNIME Executor

The Executor detects the available number of cores and the maximum assignable memory automatically at startup.

# Behaviour of CPU and RAM requirements

An Executor only accepts a job if it can fulfill the CPU and RAM requirements that were defined for the workflow. Otherwise, it will ignore the job. If a job gets accepted by an Executor its required CPU and RAM will be subtracted from the available resources until it gets either discarded/deleted or swapped back to KNIME Server. The time a job is kept on the Executor can be changed via the option com.knime.server.job.max_time_in_memory defined in the KNIME Server configuration file (opens new window).

Example:

Workflow1 executor.requirements: cpu=1, ram=16gb

Workflow2 executor.requirements: cpu=1, ram=8gb

Workflow3 executor.requirements: cpu=0.1, ram=512mb

Executor: number of cores: 4, available RAM: 32GB

Workflow1 can be executed 2 times in parallel, since RAM is limiting

Workflow2 can be executed 4 times in parallel, since CPU and RAM is limiting

由于CPU的限制,Workflow3可以并行执行40次

# 执行人预约

随着KNIME Server 4.11的发布,我们引入了保留KNIME执行器专用的可能性。这超出了现有的工作流程固定, (opens new window)因为除非满足某些要求,否则KNIME执行者现在可以拒绝接受作业。

在两个主要用例中,这可能会有所帮助:

- 根据工作流程要求保留执行程序:这可以确保具有某些属性(例如大内存,GPU)的执行程序仅接受标记为需要这些属性的作业。

- 基于单个用户或用户组的可用性要求的执行程序保留:这使您可以保证个人或组的执行资源的可用性。例如,您可以保留KNIME执行器,使其仅接受由特定组的用户发布的作业。

# 保留执行人的前提条件

为了使用Executor保留,需要与工作流固定相同的先决条件。 必须启用分布式KNIME执行器, (opens new window)并且必须安装RabbitMQ (opens new window)。在单执行程序部署中,保留将被忽略。

# 为KNIME执行器设置executor.reservation属性

为了定义工作必须满足哪些要求才能被执行者接受,必须为此执行者设置属性(除了定义执行者提供的用于固定工作流 (opens new window)的资源外)。这可以通过两种方式完成:

在执行器的系统上设置环境变量。变量的名称

KNIME_EXECUTOR_RESERVATION必须为并且值必须为Executor资源的有效布尔表达式。KNIME_EXECUTOR_RESERVATION =资源1 &&资源2 || 资源3在knime.ini文件中设置系统属性,该文件位于Executor的安装文件夹中。该文件包含执行器的配置设置,即Java虚拟机使用的选项。该属性的名称

com.knime.enterprise.executor.reservation必须为并且该值必须为Executor资源的有效布尔表达式。-Dcom.knime.enterprise.executor.reservation = resource1 && resource2 || 资源3

| 如果同时指定了环境变量和系统属性,则它们具有优先级。 | |

|---|---|

# 删除KNIME执行器的executor.reservation属性

可以通过删除环境变量或通过删除knime.ini文件中的属性来禁用该属性,具体取决于该属性的设置方式。或者,可以将环境变量或knime.ini中的属性值设置为空字符串。

| 必须重新启动执行程序才能应用更改。 | |

|---|---|

# 设置工作流程的执行程序保留属性

为各个工作流程设置执行程序保留规则的过程与固定 (opens new window)工作流程的过程相同 。也就是说,通过在KNIME Explorer中右键单击工作流程并打开``属性...''对话框来访问执行保留。

# 执行程序保留的语法和行为

The rule for Executor reservation is defined by a boolean expression and supports the following operations:

resource: valueA resource evaluates to true if and only if the job requirements contain the specified resource (see workflow pinning (opens new window)). |

|---|

&&: r1 && r2Logical AND evaluates to true if and only if r1 and r2 evaluate to true, otherwise evaluates to false. |

||: r1 || r2Logical OR evaluates to true if either r1 or r2 or both evaluate to true, otherwise evaluates to false. |

!: !rLogical negation evaluates to true if and only if r evaluates to false, otherwise evaluates to false. |

user: (user = )Evaluates to true if and only if the user loading the job is ``. Note that the parentheses are mandatory. |

group: (group = )Evaluates to true if and only if the user loading the job is in the specified group. Note that the parentheses are mandatory. |

Note: the usual operator precedence of logical operators applies, i.e. ! has a high precedence, && has a medium precedence and || has a low precedence. Additionally, you can use parentheses, to overcome this precedence, e.g.:

A && B || A && C = A && (B || C)

A KNIME Executor only accepts a job if

- the Executor can fulfill all requirments that the job has, and

- if the job’s resources requirements match the Executor’s reservation rule.

否则,该工作将被执行者拒绝。这也意味着,如果执行者的保留规则中至少定义了一种资源,则没有资源需求的作业将被拒绝。

保留规则中使用的资源应该是执行者提供的资源的子集,否则所有作业都可能会被拒绝,因为执行者将无法满足要求。

如果任何执行者不接受工作,则将其丢弃。如果有执行者愿意接受一项工作,但由于他们的负载太高而无法立即执行,那么新工作将进入超时状态(通常在60秒后)并放弃自身,请参阅负载限制 (opens new window)。

除以外的大多数特殊字符'都允许成为用户,组或资源的一部分。在这种情况下,用户名,组名和资源值必须放在之间',例如:

(用户='knime@knime.com')|| (group ='@ knime.com')&&'Python + Windows'

例:

- 工作流程所需的资源:

- w1要求

large_RAM, Linux - W2要求

large_RAM, GPU - W3要求

Linux - W4要求

Windows - w5不需要任何东西

- w1要求

- 执行官提供的资源和预订规则:

- e1提供

large_RAM, Linux, GPU并保留用于`large_RAM && (GPU || Linux)`` - e2提供

GPU, Windows并保留用于!Linux

- e1提供

- 可能的工作执行

- w1将被e2拒绝(因为e2被保留用于

!Linux),并将被e1接受。 - w2将被e2拒绝(因为e2不提供

large_RAM),并将被e1接受。 - w3将被两个KNIME执行器拒绝(因为e1为保留

large_RAM而e2为保留!Linux),并将被丢弃。 - w4将被e1拒绝(因为e1不提供

Windows)并被e2接受。 - w5将被e1拒绝(因为e1为保留

large_RAM),并被e2接受(因为空需求匹配!Linux)。

- w1将被e2拒绝(因为e2被保留用于

# 执行者团体

随着KNIME Server 4.11的发布,我们引入了将KNIME执行器分组以供专用的可能性。 由于将作业分配到与其需求相匹配的指定KNIME执行器组,因此扩展了执行器保留 (opens new window)。

这可能有用的主要用例是允许您确保具有特定属性(例如大内存,GPU)或基于特定用户和组的作业仅由特定的KNIME执行器组处理。由于只有可能匹配的KNIME执行程序会看到该消息,因此减少了接任务的潜在延迟。此外,它还允许您将KNIME执行器划分为多个逻辑组,以便于维护(例如,涉及扩展)。

# KNIME执行器组的先决条件

为了使用KNIME执行器组,需要与工作流固定相同的先决条件。 必须启用分布式KNIME执行器, (opens new window)并且必须安装RabbitMQ (opens new window)。在单执行程序部署中,将忽略组。

# 创建KNIME执行器组

To define KNIME Executor Groups the following options have to be set in the KNIME Server configuration file (opens new window):

com.knime.enterprise.executor.msgq.names=,,…Defines the names of the KNIME Executor Groups. The number of names must match the number of rules defined with com.knime.enterprise.executor.msgq.rules. Note, that names starting with amqp. are reserved for RabbitMQ. |

|---|

com.knime.enterprise.executor.msgq.rules=,,…Defines the exclusivity rules of the KNIME Executor Groups. The number of rules must match the number of rules defined with com.knime.enterprise.executor.msgq.names. |

# Assigning KNIME Executors to a group

There are the following two ways to assign an Executor to a group.

Setting an environment variable on the system of a KNIME Executor. The name of the variable has to be

KNIME_EXECUTOR_GROUPand the value must be one of the names defined incom.knime.enterprise.executor.msgq.names.KNIME_EXECUTOR_GROUP=DefaultGroupSetting a system property in the knime.ini file, which is located in the installation folder of the Executor. The file contains the configuration settings of the Executor, i.e. options used by the Java Virtual Machine. The name of the property has to be

com.knime.enterprise.executor.groupand the value must be one of the names defined incom.knime.enterprise.executor.msgq.names.-Dcom.knime.enterprise.executor.group=DefaultGroup

| The environment variable has priority over the system property if both are specified. | |

|---|---|

In addition, it is necessary to also specify the resources that are offered by an Executor. The process is the same as described for workflow pinning (opens new window). The list needs to contain at least all elements that are needed to distinguish the Executors within their group (except for rules based on user and/or group membership).

# Setting Executor group properties for a workflow

Setting the KNIME Executor Groups for individual workflows uses the same procedure as for workflow pinning (opens new window). I.e., execution reservation is accessed by right-clicking a workflow in the KNIME Explorer and opening the 'Properties…' dialog.

# Syntax and behaviour of KNIME Executor Groups

The rules for KNIME Executor Groups are defined the same way as for executor reservation (opens new window) with the exception that a group with an empty rule accepts every job. KNIME Server sets up new message queues in RabbitMQ according to the provided groups.

If a workflow is loaded its requirements are considered and matched with the first workflow group for which the job fulfills the rules. Hence, the order of the groups in com.knime.enterprise.executor.msgq.rules may have an impact on which group gets selected. In case no suitable group can be found an error is thrown. Once a job is loaded it is associated with a single selected KNIME Executor Group.

| While Executor reservations are not necessary, the KNIME Executors still have to fulfill the requirements according to workflow pinning (opens new window). | |

|---|---|

Example:

- Resources required by workflows:

- w1 requires

Python, GPU, group=G1 - w2 requires

Python, GPU - w3 requires

Python, Linux - w4 requires

Python, Windows - w5 requires nothing

- w6 requires

huge_RAM

- w1 requires

- Executors groups with the rules:

- eg1 is reserved for

('user=U1' || 'group=G1') && Python && GPU - eg2 is reserved for

Python || GPU - eg3 is reserved for